The Professional Path: How AWS Solution Architects Approach Cloud Challenges

I’ve been using the cloud, predominantly AWS, for around 10 years and have been a certified AWS solution architect for over 5 years: I got my SA-A badge in 2018 and my SA-Pro badge in 2021. Over the past years, I have also had the pleasure of working with AWS solution architects, both as a CTO for larger businesses and for startups. As a consultant and mentor, I have helped development teams make the most of AWS services.

The path to becoming a professional AWS solution architect is almost identical for everyone. Most beginners start off launching resources, predominantly virtual machines, through the AWS Management Console. Professionals, on the other hand, hardly use the console for anything and rely on CloudFormation or the CDK. Let’s look at how professionals use AWS and how their approach differs from that of beginners and intermediates.

Design for AWS specifically

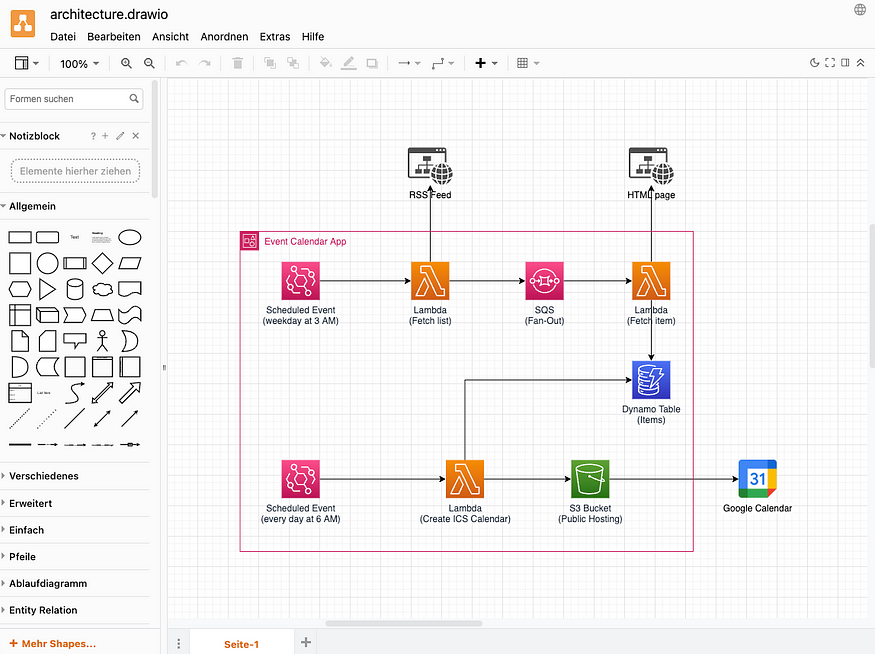

Most developers do not consider infrastructure when designing their applications. They build and design applications to be portable across multiple platforms, even though their applications will never run on any platform other than AWS. Professional architects, on the other hand, will design the application specifically for AWS, leveraging the platform’s full potential. A professional AWS solution architect will start off designing the application with a diagram, using tools such as draw.io.

The example in the diagram shows the creation of an ICS calendar file from a given data source, ready to be imported into any Google Calendar. A beginner may write an application in a Docker container or on a VM that runs cron jobs to perform the necessary actions, stores the final file on disk and then serves it through a web server like nginx or Apache on the same box. Intermediate users may split the jobs across several containers and may even use native AWS services like S3.

For professional AWS solution architects, the challenge is relatively simple: it’s an event-driven application in which EventBridge triggers Lambda, the work is fanned out through SQS, the data is stored in DynamoDB and the contents are served through S3, with a CloudFront distribution in front of the S3 bucket if necessary.

Automatically provision and decommission

Beginners and intermediates tend to use the Management Console because it lets them deploy resources quickly. This is a huge no-go for any AWS professional. In almost all cases, creating resources through the console results in a graveyard of abandoned resources where users lose track of what is deployed where and for what reason.

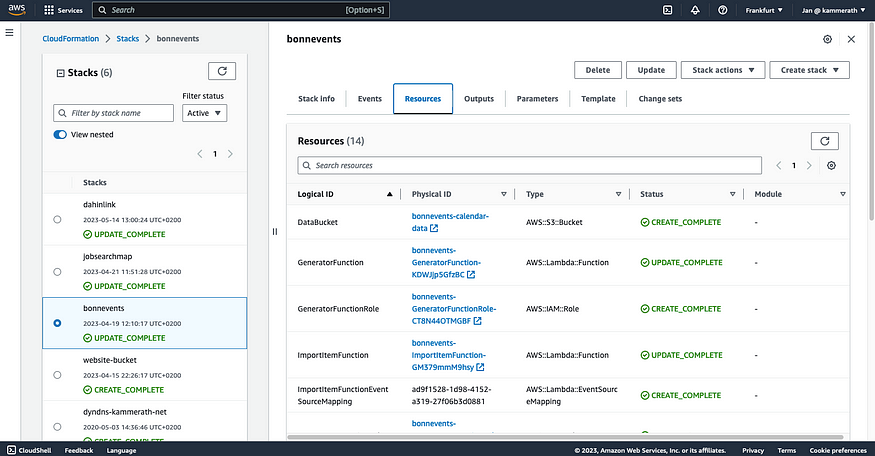

An AWS Pro will even use CloudFormation to deploy a single S3 bucket or a single IAM user or role. While this sounds like overkill to beginners, using CloudFormation puts the stack under version control and makes the resources auditable. Following the best practices for code management, any change to infrastructure has a ticket attached, is auditable and can be reviewed by non-technical auditors. If a bucket policy changes, anyone can find out who changed it, where and why, and who initially requested the change.

    AWSTemplateFormatVersion: "2010-09-09"
    Transform: AWS::Serverless-2016-10-31
    Description: Events Calendar

    Parameters:
      ImportQueueName:
        Description: "Name of the queue to orchestrate imports"
        Type: String
        Default: "calendar-event-import-queue"
      LocalEventTableName:
        Description: "Name of the table with the events"
        Type: String
        Default: "bonnevents"
      DataBucketName:
        Description: "Name of the bucket with the data"
        Type: String
        Default: "bonnevents-calendar-data"

    Resources:
      # Bucket that serves the generated calendar file
      DataBucket:
        Type: AWS::S3::Bucket
        Properties:
          BucketName: !Ref DataBucketName
          # Note: buckets created since April 2023 block public ACLs by default,
          # so this setting may be rejected unless those defaults are relaxed
          AccessControl: PublicRead

      # Table holding the imported events, keyed by start time and title
      LocalEventTable:
        Type: AWS::DynamoDB::Table
        Properties:
          TableName: !Ref LocalEventTableName
          BillingMode: PAY_PER_REQUEST
          AttributeDefinitions:
            - AttributeName: "start"
              AttributeType: N
            - AttributeName: "title"
              AttributeType: S
          KeySchema:
            - AttributeName: "start"
              KeyType: HASH
            - AttributeName: "title"
              KeyType: RANGE

      # Queue used to fan out one message per item to import
      ScheduledImportQueue:
        Type: AWS::SQS::Queue
        Properties:
          QueueName: !Ref ImportQueueName
          DelaySeconds: 0
          VisibilityTimeout: 90

      # Fetches the list of items and puts one message per item on the queue
      ScheduledImportFunction:
        Type: AWS::Serverless::Function
        DependsOn:
          - ScheduledImportQueue
        Properties:
          CodeUri: ./fetch-list/
          Handler: app.lambdaHandler
          Runtime: nodejs18.x
          MemorySize: 128
          Timeout: 90
          Policies:
            - SQSSendMessagePolicy:
                QueueName: !Ref ImportQueueName
          Environment:
            Variables:
              QUEUE_URL: !Ref ScheduledImportQueue

      # Runs the import every day at 03:00 UTC
      ScheduledImportEvent:
        DependsOn:
          - ScheduledImportFunction
        Type: AWS::Events::Rule
        Properties:
          Description: "Import all events"
          ScheduleExpression: "cron(0 3 * * ? *)"
          State: 'ENABLED'
          Targets:
            - Arn: !GetAtt ScheduledImportFunction.Arn
              Id: "LambdaScheduledImportEvent"

      # Allows the rule above to invoke the import function
      ScheduledImportEventInvokePermission:
        DependsOn:
          - ScheduledImportEvent
        Type: AWS::Lambda::Permission
        Properties:
          FunctionName: !GetAtt ScheduledImportFunction.Arn
          Action: 'lambda:InvokeFunction'
          Principal: 'events.amazonaws.com'
          SourceArn: !GetAtt ScheduledImportEvent.Arn

      # Processes a single queue message and stores the event in DynamoDB
      ImportItemFunction:
        Type: AWS::Serverless::Function
        Properties:
          CodeUri: ./fetch-item/
          Handler: app.lambdaHandler
          Runtime: nodejs18.x
          MemorySize: 1024
          Timeout: 90
          Policies:
            - SQSPollerPolicy:
                QueueName: !Ref ImportQueueName
            - DynamoDBCrudPolicy:
                TableName: !Ref LocalEventTableName
          Environment:
            Variables:
              TABLE_NAME: !Ref LocalEventTableName

      # Connects the queue to the item import function, one message per invocation
      ImportItemFunctionEventSourceMapping:
        Type: AWS::Lambda::EventSourceMapping
        Properties:
          BatchSize: 1
          Enabled: true
          EventSourceArn: !GetAtt ScheduledImportQueue.Arn
          FunctionName: !GetAtt ImportItemFunction.Arn

      # Generates the calendar file every day at 06:00 UTC
      ScheduledGeneratorEvent:
        DependsOn:
          - GeneratorFunction
        Type: AWS::Events::Rule
        Properties:
          Description: "Generate the calendar file"
          ScheduleExpression: "cron(0 6 * * ? *)"
          State: 'ENABLED'
          Targets:
            - Arn: !GetAtt GeneratorFunction.Arn
              Id: "LambdaScheduledGeneratorEvent"

      # Allows the rule above to invoke the generator function
      ScheduledGeneratorEventInvokePermission:
        DependsOn:
          - ScheduledGeneratorEvent
        Type: AWS::Lambda::Permission
        Properties:
          FunctionName: !GetAtt GeneratorFunction.Arn
          Action: 'lambda:InvokeFunction'
          Principal: 'events.amazonaws.com'
          SourceArn: !GetAtt ScheduledGeneratorEvent.Arn

      # Reads the events from DynamoDB and writes the calendar file to S3
      GeneratorFunction:
        Type: AWS::Serverless::Function
        Properties:
          CodeUri: ./generate-calendar/
          Handler: app.lambdaHandler
          Runtime: nodejs18.x
          MemorySize: 1024
          Timeout: 90
          Policies:
            - DynamoDBCrudPolicy:
                TableName: !Ref LocalEventTableName
            - S3CrudPolicy:
                BucketName: !Ref DataBucketName
          Environment:
            Variables:
              TABLE_NAME: !Ref LocalEventTableName
              BUCKET_NAME: !Ref DataBucketName

The above template is written for AWS SAM (Serverless Application Model), a further abstraction on top of AWS CloudFormation with almost identical syntax. Using CloudFormation or SAM lets you define all required resources in one or more template files. Configuration is done through parameters in the template. Deploying this template is as easy as executing a single CLI command.

    # easily deploy the template to AWS using the SAM CLI
    # (the first run is usually `sam deploy --guided` to create the configuration)
    sam deploy

Alternatively, CloudFormation templates (or rather stacks) can be deployed using the AWS CLI or the console. Any change to the template can thus be traced through the history in version control or in CloudFormation. The general rule of thumb for AWS professionals is: “never provision or decommission anything in the console”, the exception, of course, being the CloudFormation console itself. This rule often meets the most resistance from beginners and intermediates.

There’s nothing wrong with trying out resources in the Management Console and then rebuilding them with CloudFormation. However, professionals will not dig through the console, but rather work exclusively with the CloudFormation documentation. Looking at an example like the DynamoDB CloudFormation documentation gives much more insight into the capabilities and limitations than fiddling around in the console does.

When to use what: CloudFormation, SAM, CDK and SDK

A question often asked is whether to use SAM, CloudFormation, CDK or the SDK. The correct answer is “it depends”. While these tools and services overlap technically, each serves a specific purpose. The general rule of thumb is: CloudFormation and SAM are the right default. If you have a fixed set of resources that your application requires, you define them in a CloudFormation template. If your application is relatively simple and purely serverless, SAM is a simpler alternative.

The CDK (Cloud Development Kit) allows you to write code that is synthesized into CloudFormation templates, which are then deployed. The CDK is helpful if your resources are provisioned during deployment but the dynamic nature of the resource creation requirements cannot be fulfilled using CloudFormation StackSets and their features. This is the case when the deployed resources depend on dynamic external factors or systems. However, the main reason intermediate users prefer CDK over CloudFormation is that they’re more comfortable writing their stacks in their preferred programming language than writing YAML or JSON templates for CloudFormation stacks.

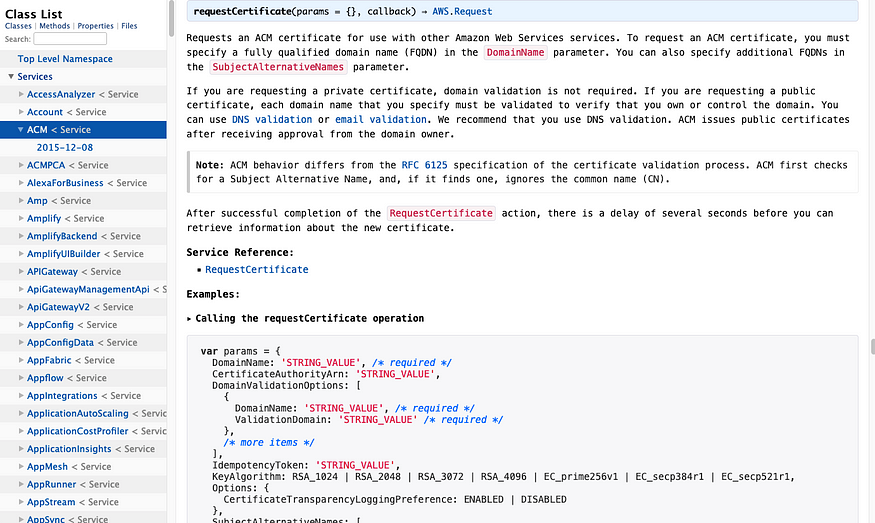

The AWS SDK (Software Development Kit) is an absolute necessity when your application interacts with any native AWS service (e.g. S3, DynamoDB, SQS). You use the SDK for any interaction with AWS services during the runtime of your application. Each SDK call is executed immediately against the service and thus, unlike CDK, CloudFormation or SAM, provides no rollback mechanism. If your users need to be able to create an S3 bucket, a DynamoDB table, an ACM certificate or a CloudFront distribution on the fly, your application will use the SDK for that.

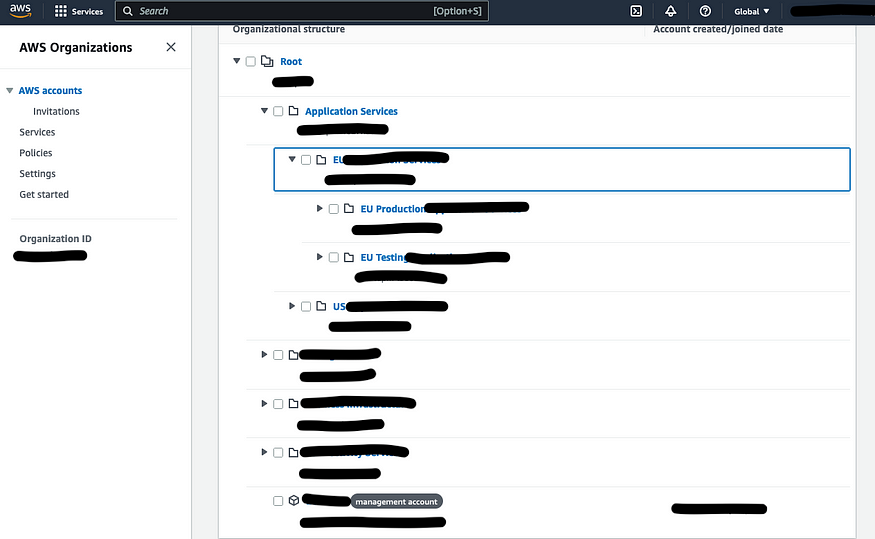

Multiple accounts in AWS Organizations

Beginners and intermediates without an AWS certification will most likely never use AWS Organizations. Structuring your AWS accounts properly right from the beginning is one of the most important tasks, especially when you manage a larger organization. Most beginners and intermediates only use a single AWS account, and I have seen account monstrosities with 100+ VMs for different purposes all packed into a single account.

That’s also why Organizations is such a critical part of the AWS Solution Architect Professional certification. Regardless of whether you want such a certification or not, you will definitely have to think about your account structure, starting by reading “Best practices for multi-account management”.
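
To make the idea of an account structure more concrete, here is a minimal sketch of an organizational unit with one member account, defined in CloudFormation. It assumes the stack is deployed from the organization’s management account; the OU name, the account name and both parameters are hypothetical placeholders and not taken from any real setup.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of a small multi-account structure (all names are hypothetical)

Parameters:
  OrganizationRootId:
    Type: String
    Description: "ID of the organization root (assumption: looked up beforehand)"
  ProductionAccountEmail:
    Type: String
    Description: "Unique root email address for the new member account"

Resources:
  # Organizational unit that groups all workload accounts
  WorkloadsOu:
    Type: AWS::Organizations::OrganizationalUnit
    Properties:
      Name: Workloads
      ParentId: !Ref OrganizationRootId

  # Dedicated account for production workloads, placed inside the OU
  ProductionAccount:
    Type: AWS::Organizations::Account
    Properties:
      AccountName: workloads-production
      Email: !Ref ProductionAccountEmail
      ParentIds:
        - !Ref WorkloadsOu
```

Separate accounts per environment or workload keep the blast radius small and make cost allocation easier than one account holding everything.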

Nothing screams “beginner” more than organizational AWS accounts that are regularly accessed through IAM users. Any professional will connect the business’s existing identity provider (e.g. Azure AD) to AWS SSO (since renamed AWS IAM Identity Center) to centrally control and monitor access to AWS accounts. This will significantly strengthen the security of the AWS accounts and make it easier to manage permissions across the various accounts and the organization.
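
Access granted through AWS SSO can itself be described in a template. The following is a sketch under assumptions: the instance ARN, the group ID (as synced from the external identity provider) and the target account ID are parameters you would have to supply, and the permission set name is made up for illustration.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of a permission set assigned to a group (hypothetical names)

Parameters:
  SsoInstanceArn:
    Type: String
    Description: "ARN of the AWS SSO / IAM Identity Center instance"
  DeveloperGroupId:
    Type: String
    Description: "ID of the developer group synced from the identity provider"
  SandboxAccountId:
    Type: String
    Description: "ID of the AWS account the group should get access to"

Resources:
  # Read-only access with sessions that expire after eight hours
  DeveloperPermissionSet:
    Type: AWS::SSO::PermissionSet
    Properties:
      InstanceArn: !Ref SsoInstanceArn
      Name: DeveloperReadOnly
      SessionDuration: PT8H
      ManagedPolicies:
        - arn:aws:iam::aws:policy/ReadOnlyAccess

  # Grants the group the permission set in exactly one account
  DeveloperAssignment:
    Type: AWS::SSO::Assignment
    Properties:
      InstanceArn: !Ref SsoInstanceArn
      PermissionSetArn: !GetAtt DeveloperPermissionSet.PermissionSetArn
      PrincipalType: GROUP
      PrincipalId: !Ref DeveloperGroupId
      TargetType: AWS_ACCOUNT
      TargetId: !Ref SandboxAccountId
```

Because the assignment lives in a template, granting or revoking access becomes a reviewed change rather than a click in the console.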

Implement security by design

What’s the point of restricting developer permissions in a playground account when your applications have full access to services or resources? Most AWS security issues come down to poorly protected EC2 instances, public S3 buckets and stolen access keys. The temporary access keys issued through AWS SSO expire, so a lost or stolen key cannot cause harm for weeks. S3 is becoming increasingly strict about public buckets, and EC2 instances shall only be accessed using SSH keys and a Security Group that limits SSH access to your IP address.
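
As an illustration of the last point, a Security Group that only admits SSH from a single address might look roughly like this. The parameter names are assumptions made for the sketch.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of a Security Group that restricts SSH to one address

Parameters:
  VpcId:
    Type: AWS::EC2::VPC::Id
    Description: "VPC the instance runs in"
  AdminIpCidr:
    Type: String
    Description: "Your own public IP address in CIDR notation, with a /32 suffix"

Resources:
  SshAccessSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: "SSH access from a single admin address only"
      VpcId: !Ref VpcId
      SecurityGroupIngress:
        # Port 22 is reachable from AdminIpCidr and from nowhere else
        - IpProtocol: tcp
          FromPort: 22
          ToPort: 22
          CidrIp: !Ref AdminIpCidr
```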

EC2 instances shall also never hold access keys; they get roles attached instead. It is absolutely mind-blowing and screams “amateur!” when an EC2 instance stores AWS access credentials locally. EC2 instances, Lambda functions and containers can all have their own roles assigned. Hence, it is a massive no-go to have the machines store credentials. Any non-AWS credentials shall be stored in AWS Secrets Manager. These are basic principles that are not debatable with any AWS professional. There are also dozens of very strong reasons why you shall not SSH into an instance of an Auto Scaling Group in production use.

    # Example CloudFormation template for an IAM pipeline user
    # for an external CI/CD pipeline (Bitbucket or GitHub) that can
    # only use S3, Lambda and CodeDeploy
    AWSTemplateFormatVersion: "2010-09-09"
    Description: Pipeline IAM User

    Resources:
      PipelineUser:
        Type: AWS::IAM::User
        Properties:
          Path: "/"
          UserName: "CIPipeline"
          ManagedPolicyArns:
            # these policies could be restricted further
            # based on the security requirements of the account
            - 'arn:aws:iam::aws:policy/AmazonS3FullAccess'
            - 'arn:aws:iam::aws:policy/AWSLambda_FullAccess'
            - 'arn:aws:iam::aws:policy/AWSCodeDeployFullAccess'

      PipelineAccessKey:
        Type: AWS::IAM::AccessKey
        Properties:
          UserName: !Ref PipelineUser

    # Caution: stack outputs are readable by anyone who can describe the stack
    Outputs:
      AWSAccessKeyId:
        Description: "AWS access key id for the pipeline"
        Value: !Ref PipelineAccessKey
      AWSSecretAccessKey:
        Description: "AWS secret access key for the pipeline"
        Value: !GetAtt PipelineAccessKey.SecretAccessKey

Not to mention that the roles attached to your compute instances or any other service are all defined in your CloudFormation template, so that changes to these roles can be audited and possible security issues addressed within that template. There are many more recommendations, and any professional will know that reading the AWS Well-Architected Framework resources is an absolute must.
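
A role for an EC2 instance, together with a secret it is allowed to read, could be sketched as follows. The resource names and the generated secret are illustrative assumptions; a real template would scope the permissions to whatever the application actually needs.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of an instance role instead of stored access keys

Resources:
  # Non-AWS credential kept in Secrets Manager rather than on the instance
  AppSecret:
    Type: AWS::SecretsManager::Secret
    Properties:
      Description: "Hypothetical third-party credential for the application"
      GenerateSecretString:
        PasswordLength: 32
        ExcludePunctuation: true

  # Role that EC2 assumes on behalf of the instance
  AppInstanceRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: ec2.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: read-app-secret
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              # The instance may read this one secret and nothing else
              - Effect: Allow
                Action: secretsmanager:GetSecretValue
                Resource: !Ref AppSecret

  # The instance profile is what gets attached to the EC2 instance
  AppInstanceProfile:
    Type: AWS::IAM::InstanceProfile
    Properties:
      Roles:
        - !Ref AppInstanceRole
```

The SDK on the instance picks up the temporary credentials of the role automatically, so no key ever has to be written to disk.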

Highly available multi-AZ and multi-region

No EC2 instance shall be left alone. In production deployments, an Auto Scaling Group and a Load Balancer defined with CloudFormation are the standard for any AWS Pro when it comes to 3-tier web apps. The defined infrastructure will always cater for a total collapse of the application, the underlying infrastructure and the availability zone. If the application requires availability beyond 99%, a multi-region approach is also used.
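
A minimal sketch of such an Auto Scaling Group is shown below. It assumes that a launch template, a load balancer target group and subnets in at least two availability zones already exist and are passed in as parameters; the sizes are arbitrary example values.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of a multi-AZ Auto Scaling Group behind a load balancer

Parameters:
  SubnetIds:
    Type: List<AWS::EC2::Subnet::Id>
    Description: "Subnets in at least two different availability zones"
  LaunchTemplateId:
    Type: String
    Description: "ID of an existing launch template (assumption)"
  LaunchTemplateVersion:
    Type: String
    Description: "Version number of the launch template to use"
  TargetGroupArn:
    Type: String
    Description: "ARN of an existing load balancer target group (assumption)"

Resources:
  WebAutoScalingGroup:
    Type: AWS::AutoScaling::AutoScalingGroup
    Properties:
      # At least two instances, spread across the given subnets
      MinSize: "2"
      MaxSize: "6"
      VPCZoneIdentifier: !Ref SubnetIds
      LaunchTemplate:
        LaunchTemplateId: !Ref LaunchTemplateId
        Version: !Ref LaunchTemplateVersion
      TargetGroupARNs:
        - !Ref TargetGroupArn
      # Replace instances that fail the load balancer health check
      HealthCheckType: ELB
      HealthCheckGracePeriod: 120
```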

RTO (Recovery Time Objective) and RPO (Recovery Point Objective) are KPIs that a professional AWS solution architect, developer and manager will always have in mind. Most apps managed by AWS Pros achieve an annual availability well beyond 99%: their total downtime is often less than 3 hours per year (roughly 99.97% availability), and these 3 hours are mostly larger planned maintenance upgrades that require AWS infrastructure resources to be decommissioned and reprovisioned.
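
To put such availability figures into perspective, the downtime allowed by an availability $A$ can be worked out as follows, assuming a 365-day year of 8,760 hours:

$$
\text{downtime per year} = (1 - A) \times 8760\ \text{h}
$$

$$
A = 99\% \Rightarrow 87.6\ \text{h}, \qquad A = 99.9\% \Rightarrow 8.76\ \text{h}, \qquad A = 99.99\% \Rightarrow 0.876\ \text{h}
$$

Conversely, 3 hours of downtime per year corresponds to

$$
A = 1 - \frac{3}{8760} \approx 99.966\%
$$

so a budget of a few hours per year sits between "three nines" and "four nines".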

High availability, redundancy and elasticity are what make AWS services unique and are the main benefit of AWS in general. People who complain about the cost of AWS often compare AWS services with hosting companies that do not provide high availability, reliability, redundancy and elasticity. The financial benefit of AWS shows in the TCO (Total Cost of Ownership) calculation, which factors in the human cost and the business impact of high availability. AWS professionals with years of experience will never deploy production systems without high availability, reliability, redundancy and elasticity.

Knowing the important AWS services

Most users tend to use the AWS services that they are most comfortable with. However, that approach often leads to using services for use cases they are not ideal for. An AWS Pro will know almost all AWS services by name and know their intended use cases. A Pro will pick the services that are best suited to the requirements. That’s also why picking the right service for the task is the main challenge in the AWS certification questions.

The most important services are S3, DynamoDB, Kinesis, EC2, VPC, RDS, Lambda, CloudFormation, CloudFront, API Gateway, Route 53, Certificate Manager, Secrets Manager, SQS, SNS, EventBridge, CodeDeploy, CloudWatch, CloudTrail, Trusted Advisor, Organizations, SSO, KMS and Billing. The first thing an AWS Pro does in a new account is set up MFA for all users and configure billing alerts and warnings.
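
A billing alert can be expressed as a template, too. The sketch below uses a CloudWatch alarm on the estimated charges; it assumes that billing alerts have been enabled in the account’s billing preferences and that the stack is deployed in us-east-1, where the billing metrics are published. The parameter names and the default threshold are example values.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of a billing alarm that sends an email notification

Parameters:
  AlertEmail:
    Type: String
    Description: "Address that receives the billing alert"
  MonthlyThresholdUsd:
    Type: Number
    Default: 50
    Description: "Estimated monthly charges in USD that trigger the alarm"

Resources:
  BillingAlertTopic:
    Type: AWS::SNS::Topic
    Properties:
      Subscription:
        - Protocol: email
          Endpoint: !Ref AlertEmail

  BillingAlarm:
    Type: AWS::CloudWatch::Alarm
    Properties:
      AlarmDescription: "Estimated charges exceeded the configured threshold"
      Namespace: AWS/Billing
      MetricName: EstimatedCharges
      Dimensions:
        - Name: Currency
          Value: USD
      Statistic: Maximum
      # The billing metric is only updated a few times a day
      Period: 21600
      EvaluationPeriods: 1
      Threshold: !Ref MonthlyThresholdUsd
      ComparisonOperator: GreaterThanThreshold
      AlarmActions:
        - !Ref BillingAlertTopic
```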

If someone comes around and requests storing highly sensitive data, a professional AWS solution architect immediately knows: that’s a job for KMS or even CloudHSM. In order to draft the best possible architecture, an architect needs to know the available building blocks and when to apply which one. If the architect finds a potentially suitable service, reading through the CloudFormation specs, AWS presentation videos and documentation of that service is the first step before trying it out.
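
For the sensitive-data case, a customer managed KMS key with an S3 bucket encrypted by it might be sketched like this. The key policy shown only delegates administration to the account itself and would normally be tightened; all resource names are hypothetical.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Sketch of a KMS-encrypted bucket for sensitive data

Resources:
  SensitiveDataKey:
    Type: AWS::KMS::Key
    Properties:
      Description: "Customer managed key for sensitive data (illustrative)"
      EnableKeyRotation: true
      KeyPolicy:
        Version: "2012-10-17"
        Statement:
          # Lets IAM policies in this account control access to the key
          - Sid: AllowAccountAdministration
            Effect: Allow
            Principal:
              AWS: !Sub "arn:aws:iam::${AWS::AccountId}:root"
            Action: "kms:*"
            Resource: "*"

  SensitiveDataBucket:
    Type: AWS::S3::Bucket
    Properties:
      # Every object is encrypted with the key above by default
      BucketEncryption:
        ServerSideEncryptionConfiguration:
          - ServerSideEncryptionByDefault:
              SSEAlgorithm: aws:kms
              KMSMasterKeyID: !GetAtt SensitiveDataKey.Arn
            BucketKeyEnabled: true
      # Keep the bucket private regardless of later ACL or policy changes
      PublicAccessBlockConfiguration:
        BlockPublicAcls: true
        BlockPublicPolicy: true
        IgnorePublicAcls: true
        RestrictPublicBuckets: true
```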

How you become an AWS Pro

There are a number of resources for learning how to use AWS properly. The first choice is certainly AWS Training. I always loved the courses from A Cloud Guru, and I personally started with these in the early days of A Cloud Guru, before they got acquired. However, the numerous free AWS resources are absolutely wonderful, especially those directly from AWS. Don’t try to outsmart all the other people who, like me, have already gone through the journey of becoming a Certified AWS Solution Architect Professional. Follow the same path and go through the learning curve.

For me, learning AWS services and going through the certifications was the most rewarding and most fun learning experience of my professional life so far. If you’re really interested in cloud computing, I can highly recommend pursuing a certification.